2025 AIChE Annual Meeting

(594f) Koopman Operators in Weighted Function Spaces for the Estimation of Lyapunov Functions and Domain of Attraction


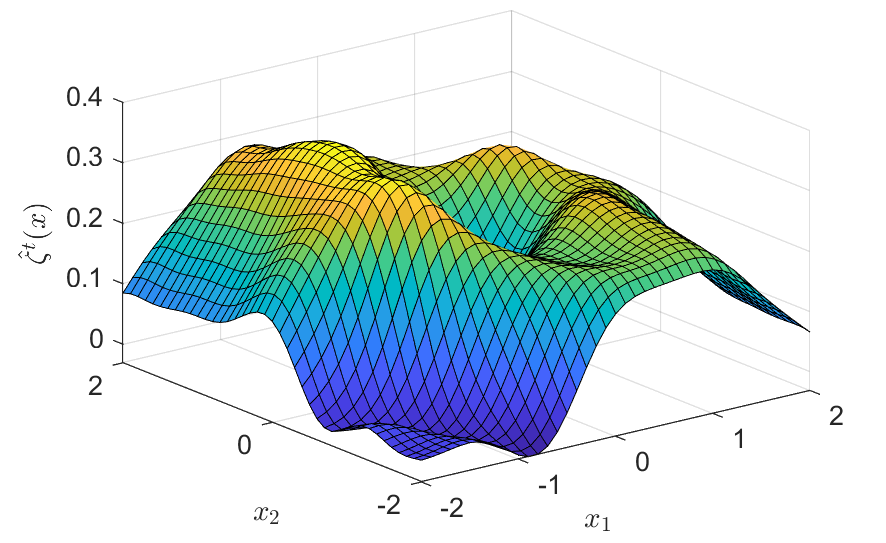

From a control point of view, linearizing nonlinear dynamics must achieve more than an approximate one-step-ahead prediction of the observables. When the original nonlinear model is transformed into a Koopman linearized form and estimated from data, the stability properties of the system must be approximately preserved and recoverable. This work therefore considers two major properties: (i) Lyapunov functions, assuming that the dynamics are globally asymptotically stable, and (ii) Zubov functions, which characterize the domain of attraction, assuming that the dynamics are locally attractive. In the latter case, the Koopman operator is modified into the so-called Zubov-Koopman operator [5]. In both cases, estimating Lyapunov and Zubov functions requires that the data-based estimate of the Koopman dynamics remain usable for prediction over arbitrarily long time horizons.

Key to such estimation is a guarantee that the Koopman operator, or whichever linearizing operator is used, is strictly contractive; otherwise, the prediction error cannot be bounded uniformly over time. Existing methods impose contraction by restricting the finite-rank approximation of the Koopman operator to Schur or Hurwitz stable matrices [6]. In this work, we instead use a space of weighted continuous functions and a corresponding weighted reproducing kernel Hilbert space (wRKHS), i.e., the class of functions of the form w·g, where g belongs to the space of continuous functions or to an RKHS and w is a weighting function.
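To illustrate why a weight can make the Koopman operator contractive, here is a minimal Python sketch. Everything in it is an illustrative assumption rather than the authors' construction: the scalar map F, the weight w(x) = |x|, and the weighted sup norm ||f||_w = sup_x |f(x)|/w(x). If w(F(x)) ≤ ρ·w(x) for all x, then for f = w·g the Koopman operator (Kf)(x) = f(F(x)) satisfies ||K^n f||_w ≤ ρ^n sup|g|, so repeated application contracts at rate ρ.

```python
import numpy as np

RHO = 0.6  # assumed known contraction rate: |F(x)| <= RHO * |x|

def F(x):
    # Hypothetical scalar dynamics; |sin x| <= |x| gives |F(x)| <= 0.6 |x|
    return 0.5 * x + 0.1 * np.sin(x)

def w(x):
    # Hypothetical weight built from the assumed rate: w(F(x)) <= RHO * w(x)
    return np.abs(x)

def weighted_sup_norm(f, xs):
    # ||f||_w = sup_x |f(x)| / w(x), approximated on a grid that avoids x = 0
    return np.max(np.abs(f(xs)) / w(xs))

def koopman_power(f, n):
    # (K^n f)(x) = f(F^n(x)): apply the Koopman operator n times
    def kf(x):
        for _ in range(n):
            x = F(x)
        return f(x)
    return kf

xs = np.concatenate([np.linspace(-5.0, -1e-3, 2000), np.linspace(1e-3, 5.0, 2000)])
g = np.cos                      # bounded continuous part, sup |g| = 1
f = lambda x: w(x) * g(x)       # element of the weighted space, f = w * g

norms = [weighted_sup_norm(koopman_power(f, n), xs) for n in range(6)]
for n, val in enumerate(norms):
    print(n, val, RHO ** n)     # weighted norm of K^n f versus the bound RHO**n
```

In the ordinary sup norm the same composition operator has norm 1 (constant functions are left unchanged), so no such decay is available; the weight is what turns a one-step estimation error into one that stays bounded over an unbounded horizon.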

- The weighting function is assigned according to side information (prior knowledge) on the rate at which the system converges to the origin. This requires neither an accurate first-principles model nor a detailed local linearization (e.g., the eigenvalues of the Jacobian [7]), and is hence easy to obtain.

- We define the Koopman or Zubov-Koopman operator on the weighted RKHS, which is dense in the weighted continuous function space. We then formulate the learning of the Koopman operator as a convex optimization problem. The learning procedure is the same as that of [3], except that norms and inner products are interpreted in the newly defined weighted spaces.

- Using existing statistical learning theory, we establish a generalization error bound for the learned Koopman or Zubov-Koopman operator in the weighted RKHS.
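The learning step described above can be sketched in Python in the spirit of the kernel ridge regression estimator of [3]. The assumptions are ours: if an RKHS has kernel k, then the weighted class {w·g} carries the kernel k_w(a, b) = w(a) k(a, b) w(b), so the only change from an unweighted regression is the kernel. We take a Gaussian base kernel, the hypothetical weight w(x) = |x|, a hypothetical map F, and an illustrative regularization value. To estimate (K h)(x) = h(F(x)) for an observable h, the values h(y_i) are regressed on the inputs x_i, which is a regularized least-squares (hence convex) problem.

```python
import numpy as np

def F(x):
    # Hypothetical dynamics generating the snapshot pairs (x_i, y_i = F(x_i))
    return 0.5 * x + 0.1 * np.sin(x)

def w(x):
    # Hypothetical weight chosen from an assumed convergence rate
    return np.abs(x)

def weighted_kernel(a, b, length_scale=0.5):
    # k_w(a, b) = w(a) k(a, b) w(b) with a Gaussian base kernel k
    k = np.exp(-((a[:, None] - b[None, :]) ** 2) / (2.0 * length_scale ** 2))
    return w(a)[:, None] * k * w(b)[None, :]

def fit_koopman_krr(x_train, y_train, h, reg=1e-6):
    # Ridge regression of h(y_i) on x_i in the weighted RKHS (a convex problem);
    # returns a function approximating (K h)(x) = h(F(x))
    n = len(x_train)
    gram = weighted_kernel(x_train, x_train)
    alpha = np.linalg.solve(gram + n * reg * np.eye(n), h(y_train))
    return lambda x: weighted_kernel(np.atleast_1d(x), x_train) @ alpha

x_train = np.linspace(-2.0, 2.0, 100)   # no sample sits exactly at the origin
y_train = F(x_train)
h = lambda x: w(x) * np.cos(x)          # observable in the weighted space

Kh_hat = fit_koopman_krr(x_train, y_train, h)
x_test = np.linspace(-1.5, 1.5, 31)
err = np.max(np.abs(Kh_hat(x_test) - h(F(x_test))))
print(f"max prediction error of (K h)(x): {err:.2e}")
```

Because every weighted kernel section vanishes where w does, the estimate is exactly zero at the origin, matching the prior knowledge that the weighted observables vanish at the equilibrium.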

Building on these results, we rewrite the Lyapunov function as the solution of a kernel-based algebraic Lyapunov equation, whose solution takes the form of an infinite series involving the Koopman operator and the canonical features of the states. Because the Koopman operator is contractive in the weighted space, the canonical features propagated through this series decay exponentially. Thus, with a data-based estimate whose error is statistically bounded, the Lyapunov function is estimated with a bounded error. The same treatment applied to the Zubov-Koopman operator yields Zubov function estimates, and hence an estimated domain of attraction, with bounded error.
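The abstract does not spell out the kernel-based algebraic Lyapunov equation, so the Python sketch below uses an assumed discrete-time analogue to show the series mechanism: V − KV = q with the hypothetical choice q(x) = x², whose series solution is V = Σ_t K^t q, i.e., V(x) = Σ_t q(F^t(x)). It then satisfies V(x) − V(F(x)) = q(x) > 0 for x ≠ 0. With the same hypothetical map, weight w(x) = |x| and Gaussian base kernel as before, the canonical feature of a propagated state has norm ||φ_w(z)|| = sqrt(k_w(z, z)) = |z|, so the features φ_w(F^t(x)) shrink at least like ρ^t.

```python
import numpy as np

RHO = 0.6  # assumed contraction rate: |F(x)| <= RHO * |x|

def F(x):
    # Hypothetical globally asymptotically stable scalar map
    return 0.5 * x + 0.1 * np.sin(x)

def q(x):
    # Hypothetical positive-definite right-hand side of V - K V = q
    return x ** 2

def lyapunov_series(x, n_terms=40):
    # Truncated series V_N(x) = sum_{t<N} (K^t q)(x) = sum_{t<N} q(F^t(x))
    total = np.zeros_like(x, dtype=float)
    for _ in range(n_terms):
        total += q(x)
        x = F(x)
    return total

xs = np.linspace(-3.0, 3.0, 61)
V = lyapunov_series(xs)
# V_N(x) - V_N(F(x)) - q(x) equals -q(F^N(x)), which is negligible for N = 40
residual = V - lyapunov_series(F(xs)) - q(xs)
print("max |V(x) - V(F(x)) - q(x)|:", np.max(np.abs(residual)))

# Norms of the propagated canonical features ||phi_w(F^t(3))|| = |F^t(3)|
z, feature_norms = 3.0, []
for t in range(10):
    feature_norms.append(abs(z))
    z = F(z)
print(feature_norms)
```

Under these assumptions, truncating the series after N terms leaves a tail of at most ρ^(2N) x²/(1 − ρ²), which is the kind of uniform bound that contraction makes possible.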

References

[1] S. L. Brunton, M. Budišić, E. Kaiser, and J. N. Kutz, “Modern Koopman theory for dynamical systems,” SIAM Rev., vol. 64, no. 2, pp. 229–340, 2022.

[2] M. O. Williams, I. G. Kevrekidis, and C. W. Rowley, “A data-driven approximation of the Koopman operator: Extending dynamic mode decomposition,” J. Nonlin. Sci., vol. 25, pp. 1307–1346, 2015.

[3] V. Kostic, P. Novelli, A. Maurer, C. Ciliberto, L. Rosasco, and M. Pontil, “Learning dynamical systems via Koopman operator regression in reproducing kernel Hilbert spaces,” Adv. Neur. Inform. Proc. Syst., vol. 35, pp. 4017–4031, 2022.

[4] B. Lusch, J. N. Kutz, and S. L. Brunton, “Deep learning for universal linear embeddings of nonlinear dynamics,” Nat. Commun., vol. 9, no. 1, p. 4950, 2018.

[5] Y. Meng, R. Zhou, and J. Liu, “Learning regions of attraction in unknown dynamical systems via Zubov-Koopman lifting: Regularities and convergence,” arXiv preprint, 2023. arXiv:2311.15119.

[6] F. Fan, B. Yi, D. Rye, G. Shi, and I. R. Manchester, “Learning stable Koopman embeddings,” in American Control Conference (ACC), pp. 2742–2747, IEEE, 2022.

[7] W. Tang, “Data-driven bifurcation analysis via learning of homeomorphism,” in 6th Annual Learning for Dynamics & Control Conference, pp. 1149–1160, PMLR, 2024.