2025 AIChE Annual Meeting

(645e) Knowledge Graph-Enhanced Large Language Model for Intelligent HAZOP Conclusions Generation

Authors

Chemical process safety remains a critical global concern, with deficiencies in Hazard and Operability (HAZOP) analysis reports identified as key contributors to major industrial accidents. Traditional HAZOP methods rely heavily on subjective expert judgment, making the process inherently labor-intensive and time-consuming. Although various intelligent HAZOP analysis systems, such as OptHAZOP (Khan and Abbasi, 1997a), PHASuite (Zhao et al., 2005), and PetroHAZOP (Zhao et al., 2009), have emerged to address these challenges, they lack the adaptability required to generate targeted conclusions for novel or customized processes.

Recent advances in Large Language Models (LLMs), notable for their text generation and knowledge reasoning capabilities, present new opportunities for personalized and automated HAZOP analyses. However, a significant barrier remains: complex industrial processes cannot be effectively represented through natural language alone, which limits the reasoning efficiency of LLMs. To bridge this gap, we propose structuring multi-source industrial process data using knowledge graphs that encode essential information, including process topology, control structures, and the reasoning logic inherent in traditional HAZOP analyses. Based on this structured representation, we introduce Deep-HAZOP, a novel framework designed to automatically extract and integrate diverse process safety information into a comprehensive knowledge graph. Specifically, Deep-HAZOP employs LLMs to first construct explicit reasoning logic chains derived from the knowledge graph, and then uses these chains to generate customized HAZOP analysis conclusions for new processes.

Ⅱ Methodology

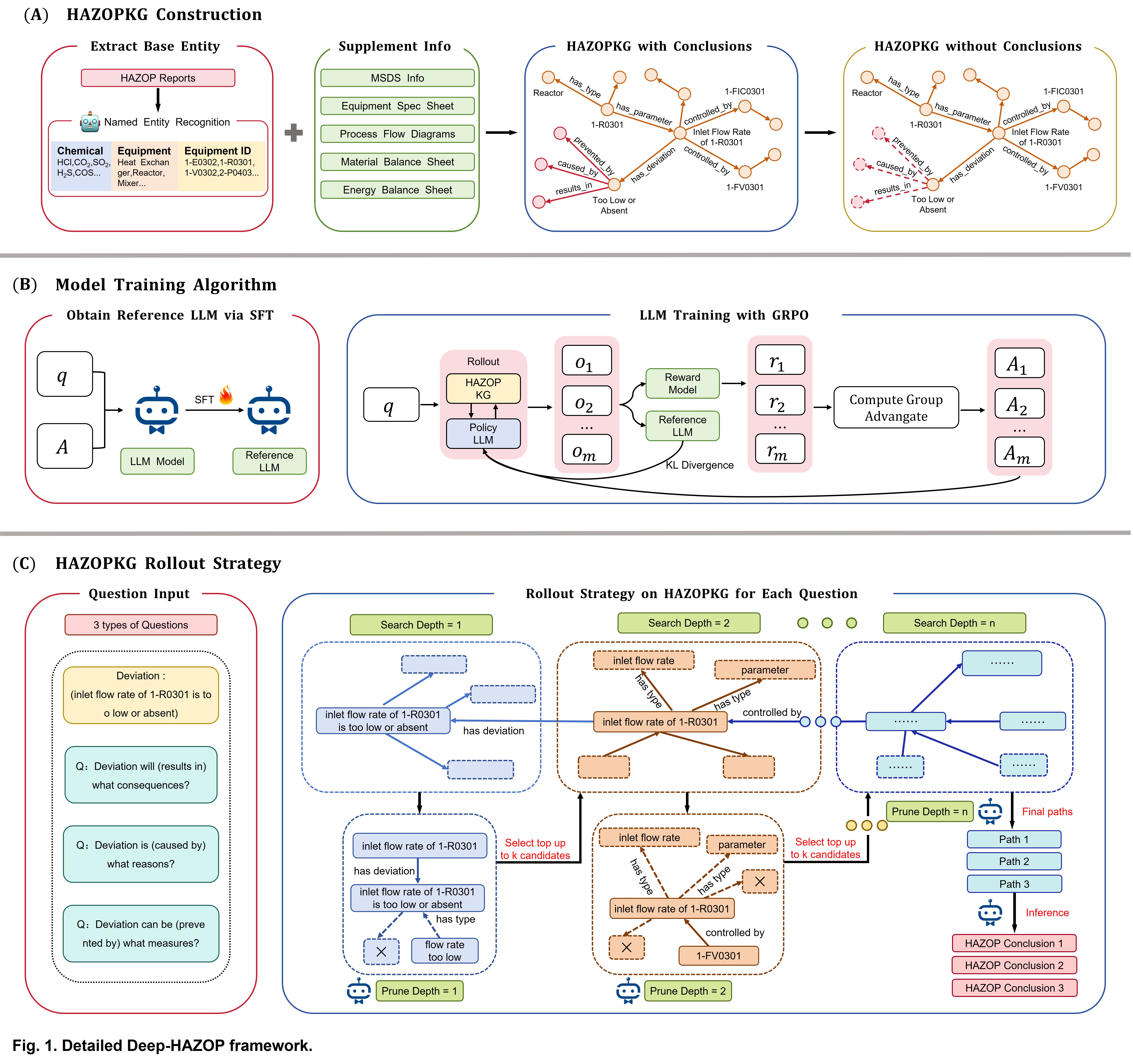

The Deep-HAZOP framework is illustrated in Fig. 1, with elaboration on three components: (A) System information collection and HAZOPKG construction; (B) Model training algorithms and policy update methods; (C) Rollout processes between HAZOPKG and policy LLM.

A. HAZOPKG Construction

The construction of the HAZOP Knowledge Graph (HAZOPKG) involves several systematic steps to integrate multi-source process safety information. Initially, a predefined HAZOP ontology explicitly defines essential concepts and relationships, establishing the foundation for the integration of data from multiple process safety sources, including piping and instrumentation diagrams (P&ID), process flow diagrams (PFD), material balance tables, equipment selection documents, and Material Safety Data Sheets (MSDS).

Subsequently, Transformer-based algorithms parse Computer Aided Design (CAD)-based P&IDs to extract critical process information such as equipment identifiers, instrumentation, valve details, and connectivity relations, as well as control structures between equipment. Similarly, PFDs are processed to identify stream connectivity and flow directionality among equipment units. These extracted elements collectively facilitate the generation of a chemical process topology graph, comprising equipment nodes and stream nodes, clearly representing inter-equipment connections and flow directions.

Based on this topology, equipment nodes are enriched by matching their identifiers with equipment selection tables, thereby integrating detailed equipment attributes (e.g., type, model, operational properties). Likewise, stream nodes are augmented using material balance information, incorporating key stream properties such as chemical composition, temperature, pressure, and flow rates. Furthermore, chemical safety attributes—including physicochemical properties and hazard characteristics—are extracted from MSDS documents and integrated into the knowledge graph.
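The topology and enrichment steps described above can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not the authors' implementation: the `Node` class, the relation names `outputs` and `feeds`, every identifier other than `1-R0301`, and all attribute values are hypothetical placeholders chosen for the example.

```python
from dataclasses import dataclass, field


@dataclass
class Node:
    node_id: str
    kind: str  # "equipment" or "stream"
    attrs: dict = field(default_factory=dict)


def build_topology(connections):
    """Build nodes and directed triples from (source, stream, target) records."""
    nodes, triples = {}, []
    for src, stream, dst in connections:
        nodes.setdefault(src, Node(src, "equipment"))
        nodes.setdefault(dst, Node(dst, "equipment"))
        nodes.setdefault(stream, Node(stream, "stream"))
        # Relation names are illustrative, not taken from the HAZOP ontology.
        triples.append((src, "outputs", stream))
        triples.append((stream, "feeds", dst))
    return nodes, triples


def enrich(nodes, table, kind):
    """Attach table attributes to nodes of one kind, matched by identifier.

    Returns the identifiers in the table that matched no node of that kind.
    """
    unmatched = []
    for node_id, attrs in table.items():
        node = nodes.get(node_id)
        if node is not None and node.kind == kind:
            node.attrs.update(attrs)
        else:
            unmatched.append(node_id)
    return unmatched


# Hypothetical connectivity, as if extracted from a P&ID/PFD.
connections = [("P0301", "S101", "1-R0301"), ("1-R0301", "S102", "V0302")]
nodes, triples = build_topology(connections)

# Hypothetical equipment selection table and material balance table.
equipment_table = {"1-R0301": {"type": "reactor"}, "E9999": {"type": "exchanger"}}
stream_table = {"S101": {"temperature_C": 320.0, "pressure_MPa": 6.5}}

unmatched = enrich(nodes, equipment_table, "equipment")
unmatched += enrich(nodes, stream_table, "stream")
```

Returning the unmatched identifiers makes gaps between the drawings and the tables visible, which is one plausible way to flag records that need manual review before they enter the graph.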

Finally, structured information from existing HAZOP analyses is incorporated to create a comprehensive HAZOPKG. Specifically, a Bidirectional Encoder Representations from Transformers-Conditional Random Field (BERT-CRF) named entity recognition model systematically extracts deviation events, their corresponding equipment, instruments, streams, causal factors, consequences, and protective measures from textual descriptions in HAZOP reports. This structured event data is then merged with the initial knowledge graph, resulting in a robust, integrated, and comprehensive HAZOPKG.
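As an illustration of how tagger output could be turned into graph triples, the sketch below decodes a BIO-tagged token sequence into entity spans and links them to the deviation event. It assumes the BERT-CRF model emits BIO tags; the label set (`DEVIATION`, `EQUIPMENT`, `CAUSE`, `CONSEQUENCE`, `SAFEGUARD`), the relation names, and the example sentence are hypothetical and are not taken from the source.

```python
def decode_bio(tokens, tags):
    """Group BIO-tagged tokens into (label, text) entity spans."""
    entities, label, span = [], None, []
    for token, tag in zip(tokens, tags):
        if tag.startswith("B-"):
            if label is not None:
                entities.append((label, " ".join(span)))
            label, span = tag[2:], [token]
        elif tag.startswith("I-") and label == tag[2:]:
            span.append(token)
        else:
            # "O" tag or an inconsistent "I-" tag: close any open span.
            if label is not None:
                entities.append((label, " ".join(span)))
            label, span = None, []
    if label is not None:
        entities.append((label, " ".join(span)))
    return entities


# Hypothetical mapping from entity label to relation name.
RELATIONS = {
    "EQUIPMENT": "occurs_at",
    "CAUSE": "caused_by",
    "CONSEQUENCE": "leads_to",
    "SAFEGUARD": "safeguarded_by",
}


def event_triples(entities):
    """Link every non-deviation entity to the first deviation found."""
    deviation = next((text for lab, text in entities if lab == "DEVIATION"), None)
    if deviation is None:
        return []
    return [(deviation, RELATIONS[lab], text)
            for lab, text in entities if lab in RELATIONS]


tokens = ["Low", "flow", "at", "1-R0301", "caused", "by", "pump", "failure"]
tags = ["B-DEVIATION", "I-DEVIATION", "O", "B-EQUIPMENT",
        "O", "O", "B-CAUSE", "I-CAUSE"]
entities = decode_bio(tokens, tags)
new_triples = event_triples(entities)
```

The resulting triples share identifiers such as `1-R0301` with the topology graph, which is what allows the extracted events to be merged into the existing nodes.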

B. Model Training Algorithm

The Deep-HAZOP framework employs Group Relative Policy Optimization (GRPO) as its reinforcement learning algorithm for LLM parameter updates, enhancing training efficiency and model performance through two components: a reference LLM baseline and grouped advantage computation. The principal advantages of GRPO over conventional supervised fine-tuning (SFT) stem from its dynamic policy adaptation mechanism and systematic integration with domain-specific knowledge graphs.

The training pipeline initiates with reference model initialization through SFT on structured knowledge graph embeddings. Each training instance consists of a deviation-based query requiring HAZOP analysis and a corresponding answer containing both logical reasoning chains and final safety conclusions. This SFT phase establishes a stable policy baseline for subsequent reinforcement learning phases.

GRPO's architectural innovations manifest in three aspects: 1) Competitive group optimization through parallel response generation, where the policy LLM produces multiple candidate solutions (O₁-Oₘ) per query; 2) Dual-criteria reward modeling combining path selection validity and conclusion accuracy; 3) Normalized advantage computation that transforms absolute rewards into relative rankings, shifting optimization focus from reward magnitude to response quality differentiation. This grouped comparison mechanism simultaneously resolves SFT's diversity limitations from static data fitting and stabilizes training by reducing reward scale sensitivity. The policy update process incorporates both KL-divergence regularization against the reference model and advantage-weighted gradient adjustments.
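The normalized advantage computation can be made concrete with a short sketch. In GRPO, the advantage of each response is its reward standardized within its own group, i.e. (reward minus group mean) divided by group standard deviation. The weighted sum used here to combine the two reward criteria, the `path_weight` parameter, and the reward values are assumptions for illustration; the source does not specify how path validity and conclusion accuracy are combined.

```python
import statistics


def combined_reward(path_score, conclusion_score, path_weight=0.5):
    """Assumed dual-criteria reward: a weighted sum of the two scores."""
    return path_weight * path_score + (1.0 - path_weight) * conclusion_score


def group_advantages(rewards, eps=1e-8):
    """Standardize rewards within one group of responses to the same query."""
    mean = statistics.fmean(rewards)
    std = statistics.pstdev(rewards)
    # eps avoids division by zero when every response gets the same reward.
    return [(r - mean) / (std + eps) for r in rewards]


# Hypothetical (path validity, conclusion accuracy) scores for four responses.
scores = [(1.0, 1.0), (0.0, 0.0), (1.0, 0.0), (0.0, 1.0)]
rewards = [combined_reward(p, c) for p, c in scores]
advantages = group_advantages(rewards)

# Rescaling all rewards leaves the advantages essentially unchanged.
scaled = group_advantages([10.0 * r for r in rewards])
```

Because only the position of a response relative to its group matters, multiplying every reward by a constant has almost no effect on the update, which is the reduced sensitivity to reward scale noted above.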

Through iterative generate-evaluate-compare cycles, GRPO achieves synergistic integration of LLM generative capacity with knowledge graph constraints, maintaining SFT's training stability while enabling enhanced exploration efficiency through structured reinforcement learning. The framework demonstrates particular effectiveness in resource-constrained safety engineering applications requiring rigorous reasoning verification.

C. HAZOPKG Rollout Strategy

To enable comprehensive interaction with the HAZOPKG for scenario-specific information retrieval and reasoning enhancement, we implement a search-prune rollout strategy for the policy LLM. This framework executes structured query processing through the following operational phases:

- Search Initialization: Anchor the input deviation (e.g., "inlet flow rate of 1-R0301 is too low or absent") as root node S(1,1). Extract all one-hop connected triples from the KG as candidate logic paths {P(1,1), P(1,2),...,P(1,m)}, recording this process as Search Depth=1.

- Pruning Optimization: Pass all candidate paths to the policy LLM for latent relationship analysis. Retain up to the top-k most HAZOP-relevant candidates {C(1,1),...,C(1,k)} through attention-weighted selection, appending them to the logic trajectory. Document this phase as Prune Depth=1.

- Iterative Execution: Recursively perform n-level search operations using prior pruning outputs as new anchor nodes. Each cycle maintains strict depth progression (Search Depth=i, Prune Depth=i) until reaching the maximum depth n.

- Trajectory Synthesis: Upon completing n pruning iterations, aggregate final logic trajectories from all depth levels. Process these through the LLM for multi-path HAZOP conclusion synthesis, ensuring comprehensive conclusion generation.

This depth-constrained search mechanism balances exploration breadth with computational efficiency, systematically expanding reasoning paths while maintaining KG-guided relevance through iterative pruning operations.
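The four phases above can be sketched as a depth-limited loop that alternates one-hop search with top-k pruning. This is a simplified illustration, not the authors' implementation: `score_fn` is a hypothetical stand-in for the policy LLM's relevance judgement, only outgoing edges are searched, and the toy triples and relation weights are invented for the example.

```python
from collections import defaultdict


def build_index(triples):
    """Index triples by head entity for one-hop lookup."""
    index = defaultdict(list)
    for head, rel, tail in triples:
        index[head].append((head, rel, tail))
    return index


def search_prune(index, root, score_fn, k=2, max_depth=3):
    """Alternate one-hop search and top-k pruning up to max_depth."""
    trajectory, anchors, visited = [], [root], {root}
    for depth in range(1, max_depth + 1):
        # Search: collect every one-hop triple leaving the current anchors.
        candidates = [t for a in anchors for t in index.get(a, [])]
        if not candidates:
            break
        # Prune: keep at most k candidates, highest score first.
        kept = sorted(candidates, key=score_fn, reverse=True)[:k]
        trajectory.append((depth, kept))
        # Tails of kept triples become the anchors of the next search.
        anchors = []
        for _, _, tail in kept:
            if tail not in visited:
                visited.add(tail)
                anchors.append(tail)
    return trajectory


# Hypothetical toy graph around the deviation used in the text.
root = "low inlet flow at 1-R0301"
toy_triples = [
    (root, "caused_by", "feed pump failure"),
    (root, "leads_to", "reactor overheating"),
    (root, "located_at", "1-R0301"),
    ("feed pump failure", "safeguarded_by", "standby pump"),
    ("reactor overheating", "safeguarded_by", "high temperature alarm"),
]
weights = {"caused_by": 3, "leads_to": 2, "safeguarded_by": 2, "located_at": 1}

trajectory = search_prune(
    build_index(toy_triples),
    root,
    score_fn=lambda t: weights.get(t[1], 0),
    k=2,
    max_depth=2,
)
```

With k candidates kept per level, each search step expands from at most k anchors, so the number of triples the LLM must score grows with depth and anchor degree rather than with the size of the whole graph. The collected trajectory would then be passed to the LLM for conclusion synthesis.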

Ⅲ Results

To validate the effectiveness of the proposed Deep-HAZOP framework, we conducted a case study using a specifically curated dataset (Li and Zhao, 2025), which consists of 19 process design projects related to deep desulfurization. The resulting HAZOPKG contains 2988 entities, 72 relationship types, and 7867 triples. It explicitly includes detailed information on 36 chemicals, 133 equipment items, 165 process streams, 885 causes, 651 consequences, 945 safeguards, 176 control instruments, and 159 measurement instruments. Deep-HAZOP employs Qwen2.5-3B-Instruct as its foundational LLM, selected for its demonstrated proficiency in instruction comprehension and task execution, both critical attributes for our application. To illustrate operational efficacy, we present a representative example that analyzes the deviation "inlet flow rate of 1-R0301 is too low or absent" together with an inquiry into its preventive measures. The framework's reasoning process is visualized by tracing both the reasoning path construction and conclusion synthesis phases. Our analysis indicates that the model is capable of context-aware triple-path selection and scenario-specific deduction, demonstrating potential for generating process-adaptive HAZOP conclusions through structured knowledge integration.

Ⅳ Implication

The limitations of conventional HAZOP analysis (subjectivity and labor intensity), combined with the inadequacy of automated systems for emerging industrial processes, drive the need for knowledge-enhanced methodologies. This study presents Deep-HAZOP, which integrates HAZOPKG with an LLM to address these challenges. The knowledge graph organizes process topology, control architectures, and historical reasoning patterns, overcoming the limitations of natural language in complex industrial contexts.

The framework implements three phases: 1) automated KG construction; 2) GRPO training that incorporates KG constraints through iterative generation-assessment cycles; and 3) KG-guided reasoning through search-prune expansion. Depth-constrained mechanisms optimize exploration efficiency while maintaining KG relevance via progressive pruning. GRPO aligns LLM outputs with the KG structure through structured reinforcement learning. The case study results indicate the model's competency in triple-path selection and contextual deduction, demonstrating adaptive analytical capabilities for process-specific conclusions.

Future research should prioritize dynamic KG updates, so that the framework can adapt as processes change, and improvements in inference performance. In addition, path consolidation through attribute embedding could transform redundant pathways into intrinsic node properties, streamlining analytical integration.

References

- Khan, F., & Abbasi, S. A. (1997a). OptHAZOP: an effective and optimum approach for HAZOP study. Journal of Loss Prevention in the Process Industries, 10(3), 191–204. https://doi.org/10.1016/S0950-4230(97)00002-8

- Zhao, C., Bhushan, M., & Venkatasubramanian, V. (2005). PHASuite: An Automated HAZOP Analysis Tool for Chemical Processes: Part I: Knowledge Engineering Framework. Process Safety and Environmental Protection, 83(6), 509–532. https://doi.org/10.1205/psep.04055

- Zhao, J., Cui, L., Zhao, L., Qiu, T., & Chen, B. (2009). Learning HAZOP expert system by case-based reasoning and ontology. Computers and Chemical Engineering, 33(1), 371–378. https://doi.org/10.1016/j.compchemeng.2008.10.006

- Li, Z., & Zhao, J. (2025). HAZOPCT: A HAZOP Analysis Completeness Tool based on Knowledge Graph Reasoning. Process Safety and Environmental Protection, 107025. https://doi.org/10.1016/j.psep.2025.107025