2025 AIChE Annual Meeting

(394k) Bridging Computer Vision and Chemical Experimentation for Automated Observation

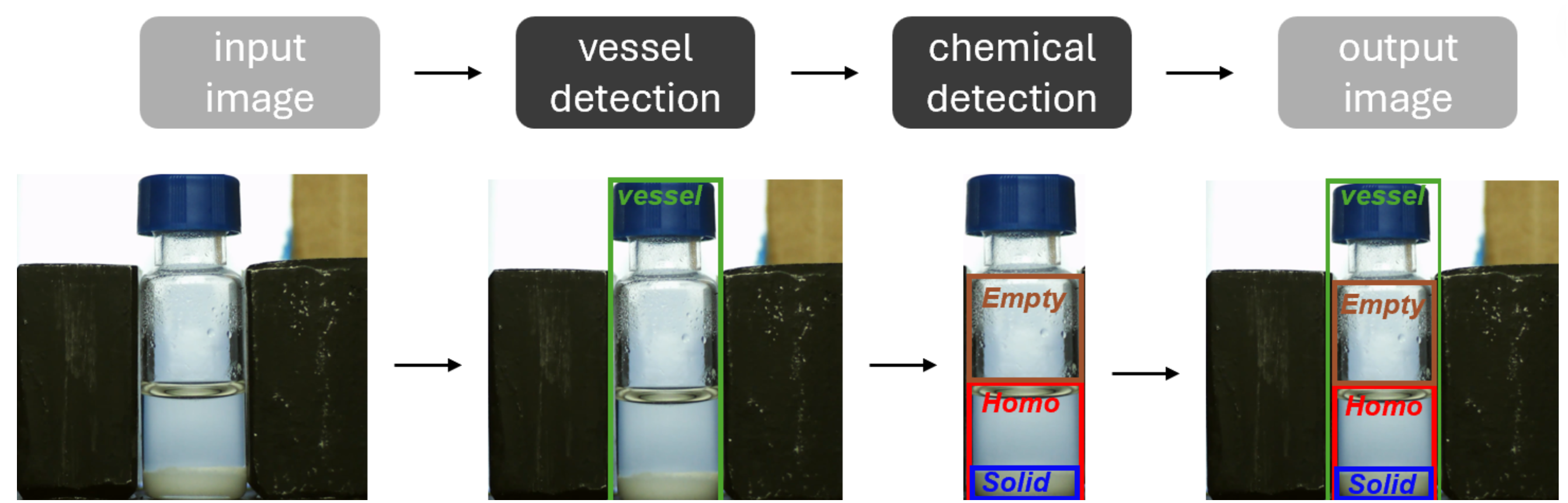

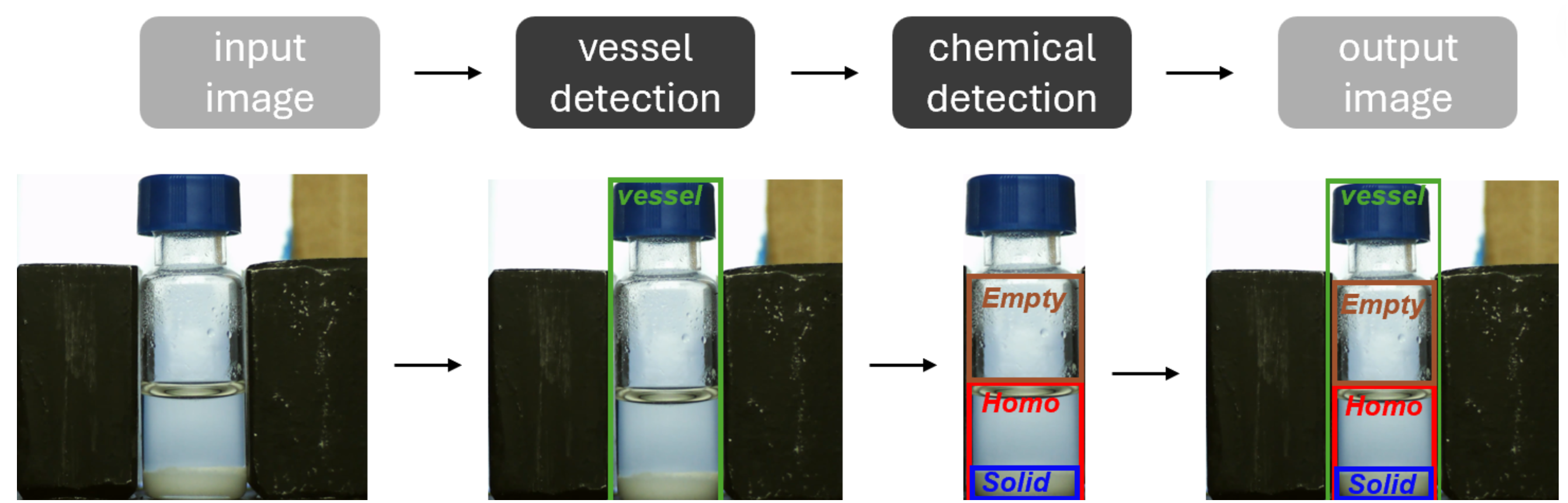

Chemical experiments are often characterized by macroscopic visual cues such as crystal morphology, turbidity, and phase separation. However, existing image-to-text and image-to-video generative models struggle to synthesize realistic chemical visual cues and to accurately model the dynamic behavior of chemical systems. Here, we introduce a computer vision system for real-time detection and analysis of visual cues in dynamic chemical processes spanning solid-solid, solid-liquid, liquid-gas, and solid-gas interactions. The system enables automated observation of key experimental behaviors such as dissolution, melting, suspension, mixing, and settling.

It employs a hierarchical detection approach with two specialized deep learning models:

1. Vessel Detection Model: identifies transparent laboratory equipment (e.g., reactors, beakers, and other vessels).
2. Chemical Detection Model: detects and classifies chemical phases (gas, liquid, solid) within the identified vessels.

The system was trained on a curated dataset of 6,493 images of transparent laboratory vessels and 3,801 images extracted from video recordings of real chemical experiments conducted in laboratory settings. It uses YOLO (You Only Look Once), an object detection framework optimized for real-time inference, fine-tuned on this custom dataset. Beyond deep learning, the system integrates traditional image analysis techniques for measuring turbidity, color, and volume, enriching visual cue monitoring. This open-source software supports data-rich experimentation, bridging the gap between automation and chemical process monitoring.
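The two-stage detection and the follow-up image measurements can be illustrated with a minimal sketch. It assumes each detector is a callable that takes an image and returns labelled boxes in that image's coordinates; in a real pipeline each would wrap a fine-tuned YOLO model. The `Box` type, the detector interface, and all function names are hypothetical and are not taken from the authors' software. Treating mean brightness as a turbidity proxy and liquid height over vessel height as a volume proxy are likewise assumptions; their calibration depends on lighting and vessel geometry. To stay self-contained, images are plain nested lists of RGB tuples rather than array types.

```python
from dataclasses import dataclass
from typing import Callable, List, Tuple

Pixel = Tuple[int, int, int]   # (R, G, B), each 0-255
Image = List[List[Pixel]]      # row-major: image[y][x]


@dataclass
class Box:
    """Axis-aligned box in pixel coordinates; x2 and y2 are exclusive."""
    label: str
    x1: int
    y1: int
    x2: int
    y2: int


# Hypothetical detector interface: image in, labelled boxes out, with box
# coordinates relative to the image it was given. In practice each detector
# would wrap a fine-tuned YOLO model.
Detector = Callable[[Image], List[Box]]


def crop(image: Image, box: Box) -> Image:
    """Return the sub-image covered by `box`."""
    return [row[box.x1:box.x2] for row in image[box.y1:box.y2]]


def detect_hierarchically(frame: Image, vessel_detector: Detector,
                          chemical_detector: Detector) -> List[Tuple[Box, List[Box]]]:
    """Stage 1 finds vessels in the full frame; stage 2 finds phases inside each vessel crop."""
    results = []
    for vessel in vessel_detector(frame):
        phases = [
            # Shift crop-relative coordinates back into full-frame coordinates.
            Box(p.label, p.x1 + vessel.x1, p.y1 + vessel.y1,
                p.x2 + vessel.x1, p.y2 + vessel.y1)
            for p in chemical_detector(crop(frame, vessel))
        ]
        results.append((vessel, phases))
    return results


def mean_color(region: Image) -> Tuple[float, float, float]:
    """Average (R, G, B) over all pixels in the region."""
    pixels = [px for row in region for px in row]
    n = len(pixels)
    return (sum(p[0] for p in pixels) / n,
            sum(p[1] for p in pixels) / n,
            sum(p[2] for p in pixels) / n)


def mean_brightness(region: Image) -> float:
    """Mean BT.601 luma scaled to [0, 1]; used here as an assumed turbidity proxy."""
    r, g, b = mean_color(region)
    return (0.299 * r + 0.587 * g + 0.114 * b) / 255.0


def fill_fraction(vessel: Box, liquid: Box) -> float:
    """Liquid height over vessel height; a volume proxy assuming a uniform cross-section."""
    return (liquid.y2 - liquid.y1) / (vessel.y2 - vessel.y1)


if __name__ == "__main__":
    # Toy 10x10 frame: clear headspace (rows 0-3) above a tinted liquid layer (rows 4-9).
    frame = [[(255, 255, 255) if y < 4 else (100, 150, 200) for _ in range(10)]
             for y in range(10)]

    def fake_vessels(img: Image) -> List[Box]:
        return [Box("vessel", 2, 0, 8, 10)]

    def fake_phases(img: Image) -> List[Box]:
        return [Box("liquid", 0, 4, 6, 10)]  # coordinates relative to the vessel crop

    for vessel, phases in detect_hierarchically(frame, fake_vessels, fake_phases):
        for phase in phases:
            region = crop(frame, phase)
            print(phase.label, mean_color(region), mean_brightness(region),
                  fill_fraction(vessel, phase))
```

Running the phase detector only on vessel crops keeps the second model focused on the vessel interior, and the coordinate shift lets its boxes be reported in the original frame. Because the measurements operate on the returned boxes, they work with any detector that follows the same interface.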